Opinion | Artificial intelligence in 2024 campaign ads needs regulation

Florida Gov. Ron DeSantis’s 2024 team posted manufactured depictions of Mr. Trump during his time as president hugging Anthony S. Fauci.

Donald Trump became a household name by FIRING plenty of people *on TV*

But when it came to Fauci… pic.twitter.com/7Lxwf75NQm

— DeSantis War Room 🐊 (@DeSantisWarRoom) June 5, 2023

Mr. Trump, in turn, shared a parody of Mr. DeSantis’s much-mocked campaign launch featuring AI-generated voices mimicking the Republican governor as well as Elon Musk, Dick Cheney and others. And the Republican National Committee released an ad filled with fake visions of a dystopian future under President Biden. The good news? The RNC included a note acknowledging that the footage was created by a machine.

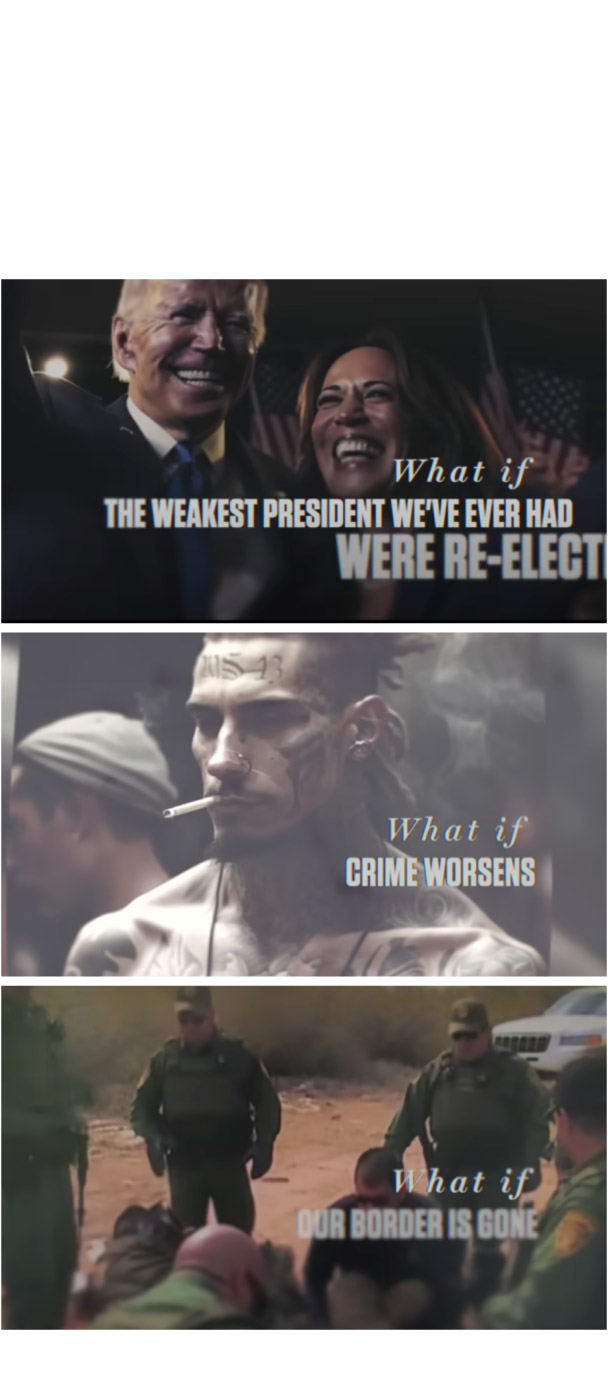

Screenshots from the RNC’s AI-generated ad. The Republican National Committee released an ad in April entirely illustrated with AI-generated images that depicted a dystopian future if President Biden were re-elected.

Source: Republican National Committee

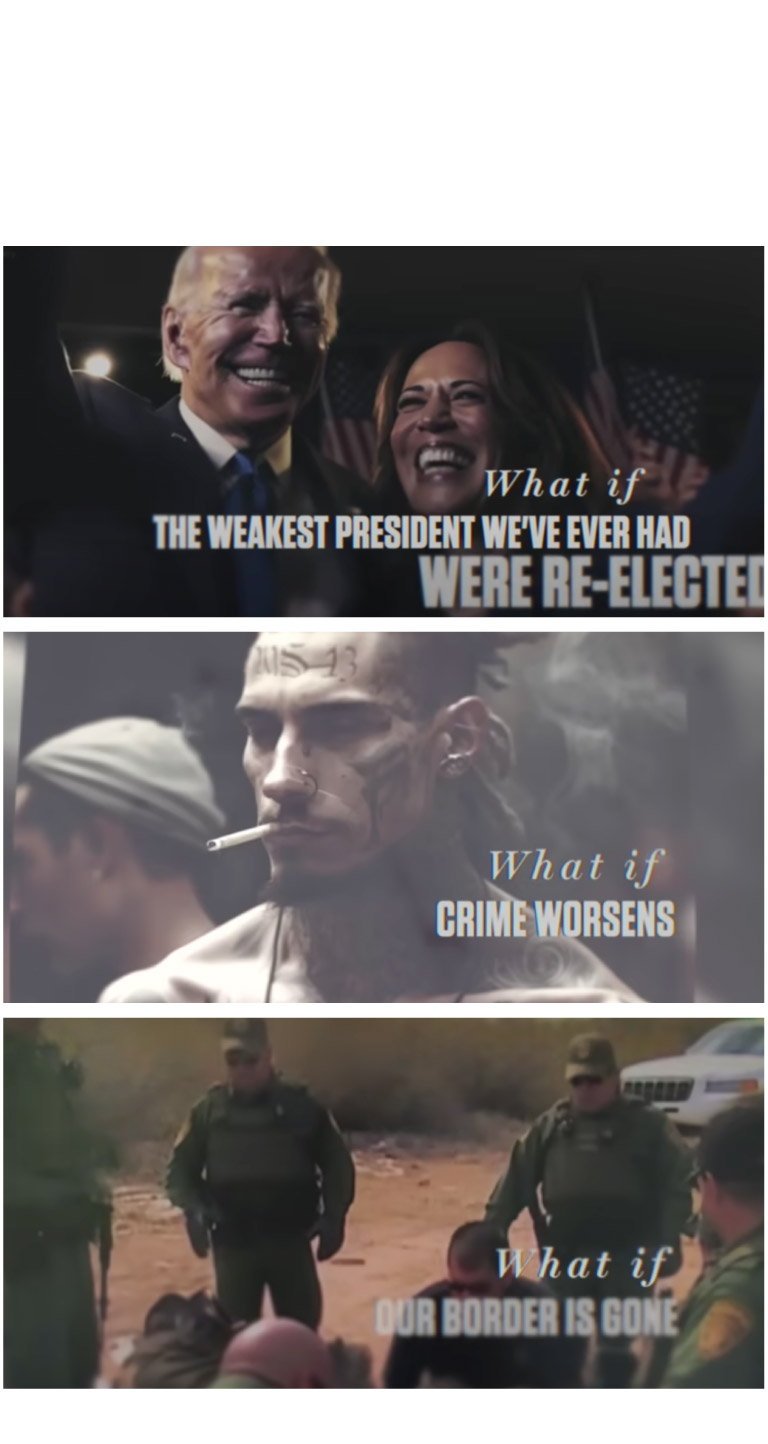


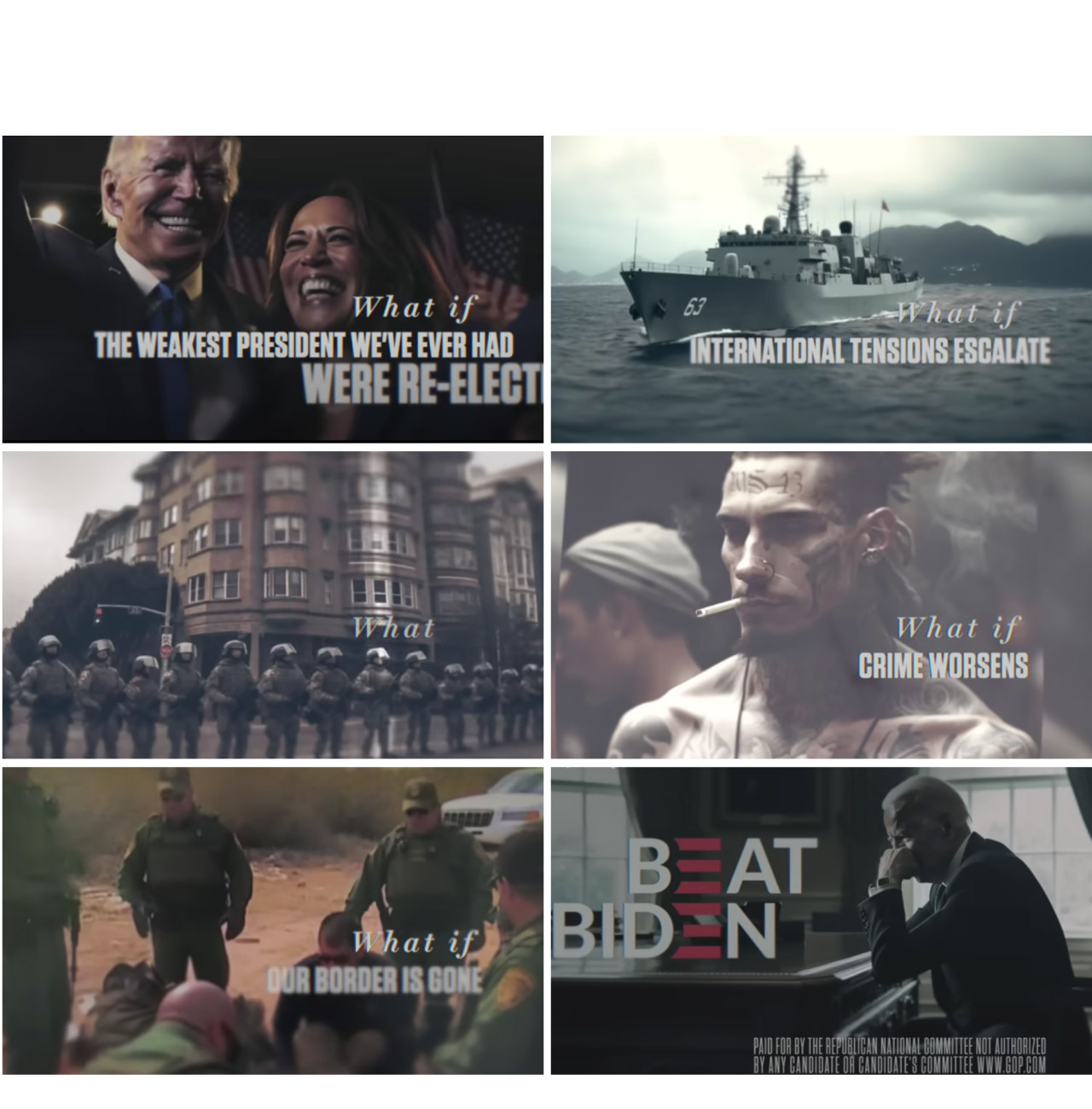


The bad news is that there’s no guarantee disclosure will be the norm. Rep. Yvette D. Clarke (D-N.Y.) has introduced a bill that would require disclosures identifying AI-generated content in political ads. That’s a solid start, and a necessary one. But, better yet, the RNC, the Democratic National Committee and their counterparts coordinating state and local races should go further than disclosure alone, telling candidates to identify such material in all their messaging, including fundraising and outreach.

The party committees should also consider taking some uses off the table entirely. Large language models can be instrumental for small campaigns that can’t afford to hire staff to draft fundraising emails. Even deeper-pocketed operations could stand to benefit from personalizing donor entreaties or identifying likely supporters. But those legitimate uses are different from simulating a gaffe by your opponent and blasting it out across the internet or paying to put it on television.

Ideally, campaigns would refrain altogether from using AI to depict false realities, such as rendering a city exaggeratedly crime-infested to criticize an incumbent mayor or fabricating a diverse group of eager supporters. Similar effects could admittedly be achieved with more traditional photo-editing tools. But the possibility that AI will evolve into an ever more adept illusionist, as well as the likelihood that bad actors will deploy it to large audiences, means it’s vital to preserve a world in which voters can (mostly) believe what they see.

Party committees should do this rule-setting together, signing a pact, proving that the integrity of information in elections isn’t a partisan issue. And honest candidates should refrain of their own accord from dishonest tactics. Realistically, however, they’ll need a push from regulators. The Federal Election Commission already has a stricture on the books prohibiting the impersonation of candidates in campaign ads. The agency recently deadlocked over whether this authority extends to AI images. The commissioners who voted no on opening the matter to public comment should reconsider. Even better, lawmakers should explicitly grant the agency the authority to step in.

There are plenty of reasons to worry about what the rise of AI will do to our democracy. Persuading foreign adversaries as well as domestic mischief-mongers not to sow discord is probably a lost cause. Platforms’ job of rooting out disinformation has become all the more important, and all the more difficult, now that better lies can be told to so many people for so little money. Congress is working on a broad-based framework to regulate AI, but that will take months or even years. There’s no excuse for government not to take smaller steps forward on the path immediately in front of it.
