Preparing for the ‘golden age’ of artificial intelligence and machine learning


Can businesses trust decisions that artificial intelligence and machine learning are churning out in increasingly larger numbers? Those decisions need more checks and balances: IT leaders and professionals have to ensure that AI is as fair, unbiased, and accurate as possible. This means more training and greater investments in data platforms. A new survey of IT executives conducted by ZDNet found that companies need more data engineers, data scientists, and developers to deliver on these goals.

The survey confirmed that AI and ML initiatives are front and center at most enterprises. As of August, when ZDNet conducted the survey, close to half of the represented enterprises (44%) had AI-based technology actively being built or deployed. Another 22% had projects under development. Efforts in this space are still new and emerging — 59% of surveyed enterprises have been working with AI for less than three years. Survey respondents included executives, CIOs, CTOs, analysts/systems analysts, enterprise architects, developers, and project managers. Industries represented included technology, services, retail, and financial services. Company sizes varied.

Swami Sivasubramanian, VP of machine learning at Amazon Web Services, calls this the “golden age” of AI and machine learning. That’s because this technology “is becoming a core part of businesses around the world.”

IT teams are taking a direct lead in such efforts, with most companies building their systems in-house. Close to two-thirds of respondents, 63%, report that their AI systems are built and maintained by in-house IT staff. Almost half, 45%, also subscribe to AI-related services through Software as a Service (SaaS) providers. Another 30% use Platform as a Service (PaaS), and 28% turn to outside consultants or service firms.

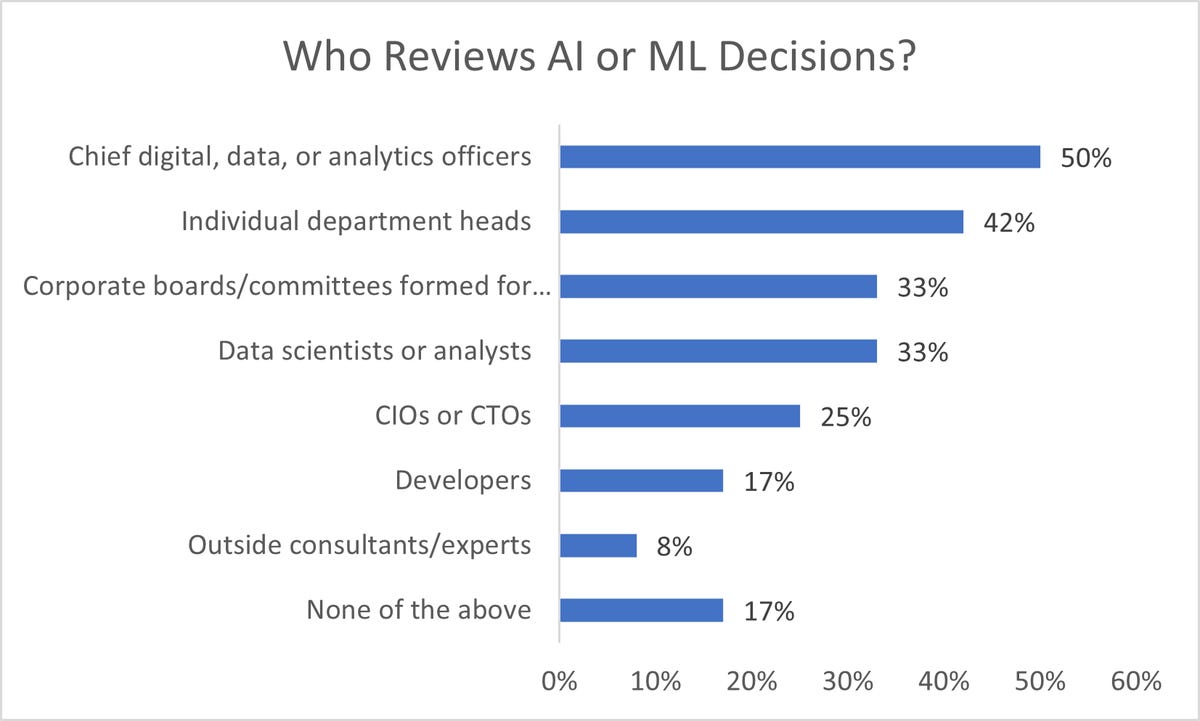

Chief digital officers, chief data officers, or chief analytics officers usually take the lead on AI- and ML-driven output, with 50% of respondents identifying these executives as primary decision-makers. In addition, 42% say individual department heads play a role in the process, and 33% of surveyed organizations have corporate committees that exercise AI oversight. One-third of these organizations assign AI and ML responsibilities to data scientists and analysts. Interestingly, CIOs and CTOs weigh in at only 25% of the respondents’ companies.

At the same time, AI has not fully permeated the day-to-day jobs of IT professionals and other employees. For example, only 14% of respondents said that most of their IT workforce works directly with, or has access to, AI technologies in its daily routine.

Implementation is hard

“Implementing an AI solution is not easy, and there are many examples of where AI has gone wrong in production,” says Tripti Sethi, senior director at Avanade. “The companies we have seen benefit from AI the most understand that AI is not a plug-and-play tool, but rather a capability that needs to be fostered and matured. These companies are asking ‘what business value can I drive with data?’ rather than ‘what can my data do?’”

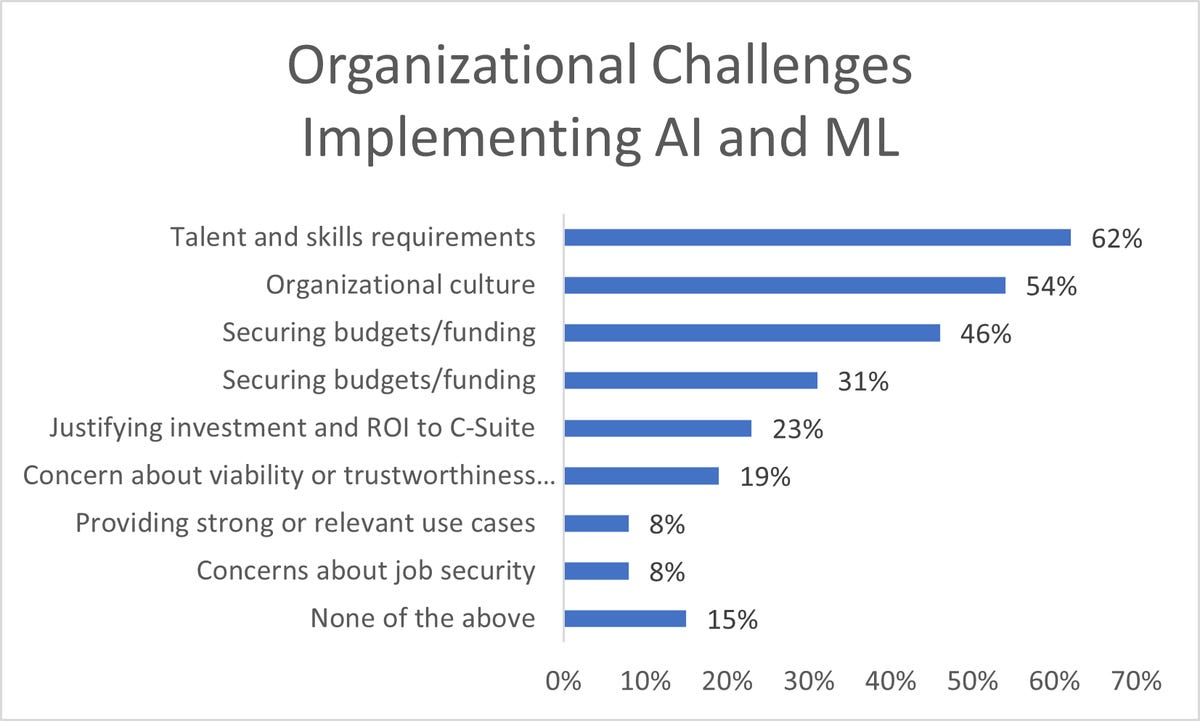

Skills availability is one of the leading issues that enterprises face in building and maintaining AI-driven systems. Close to two-thirds of surveyed enterprises, 62%, indicated that they couldn’t find talent with the skills needed to move to AI. More than half, 54%, say that it’s been difficult to deploy AI within their existing organizational cultures, and 46% point to difficulties in finding funding for the programs they want to implement.

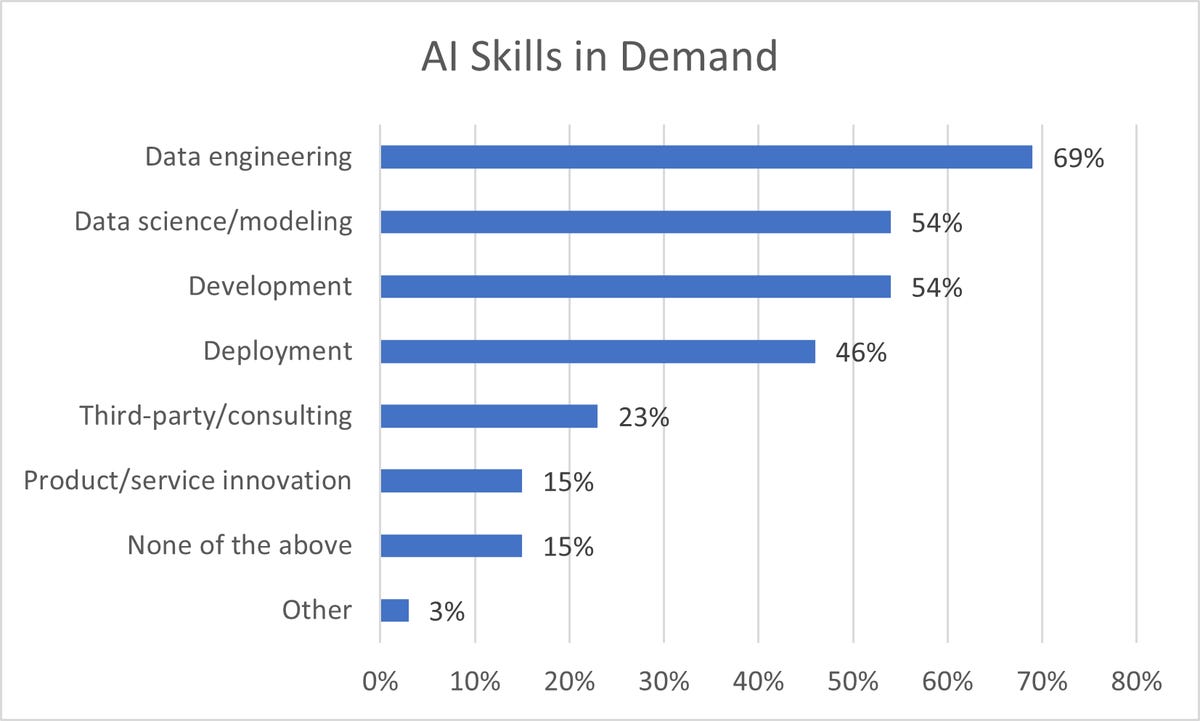

Data engineering, cited by 69% of respondents, is the most in-demand skill for supporting AI and ML initiatives. AI and ML algorithms are only as good as the data fed into them, so employees with data expertise are essential for validating and cleaning the data and assuring its responsive delivery. Apart from data engineering, enterprises want data scientists to develop data models and developers to build the algorithms and supporting applications.
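To make the validating and cleaning work more concrete, here is a minimal Python sketch of a pass that runs before records reach a model. The schema, the field names (`customer_id`, `age`, `region`), and the age range are hypothetical assumptions for illustration, not drawn from the survey; production pipelines typically rely on dedicated data-quality tooling.

```python
from dataclasses import dataclass, field

# Hypothetical schema for illustration: field name -> (expected type, required?)
SCHEMA = {
    "customer_id": (str, True),
    "age": (int, True),
    "region": (str, False),
}


@dataclass
class ValidationReport:
    kept: list = field(default_factory=list)      # cleaned records ready for training
    rejected: list = field(default_factory=list)  # (record, reason) pairs for follow-up


def validate_and_clean(records, schema=SCHEMA, age_range=(18, 120)):
    report = ValidationReport()
    seen_ids = set()
    for rec in records:
        reason = None
        # Validation: required fields are present and have the expected type
        for name, (ftype, required) in schema.items():
            value = rec.get(name)
            if value is None:
                if required:
                    reason = f"missing {name}"
                    break
                continue
            if not isinstance(value, ftype):
                reason = f"bad type for {name}"
                break
        # Validation: plausible value range and no duplicate keys
        if reason is None and not age_range[0] <= rec["age"] <= age_range[1]:
            reason = "age out of range"
        if reason is None and rec["customer_id"] in seen_ids:
            reason = "duplicate customer_id"
        if reason is not None:
            report.rejected.append((rec, reason))
            continue
        seen_ids.add(rec["customer_id"])
        # Cleaning: normalize the optional free-text field
        cleaned = dict(rec)
        cleaned["region"] = (rec.get("region") or "unknown").strip().lower()
        report.kept.append(cleaned)
    return report
```

Keeping rejected records together with a reason, rather than silently dropping them, gives data engineers something to audit when an upstream source changes.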

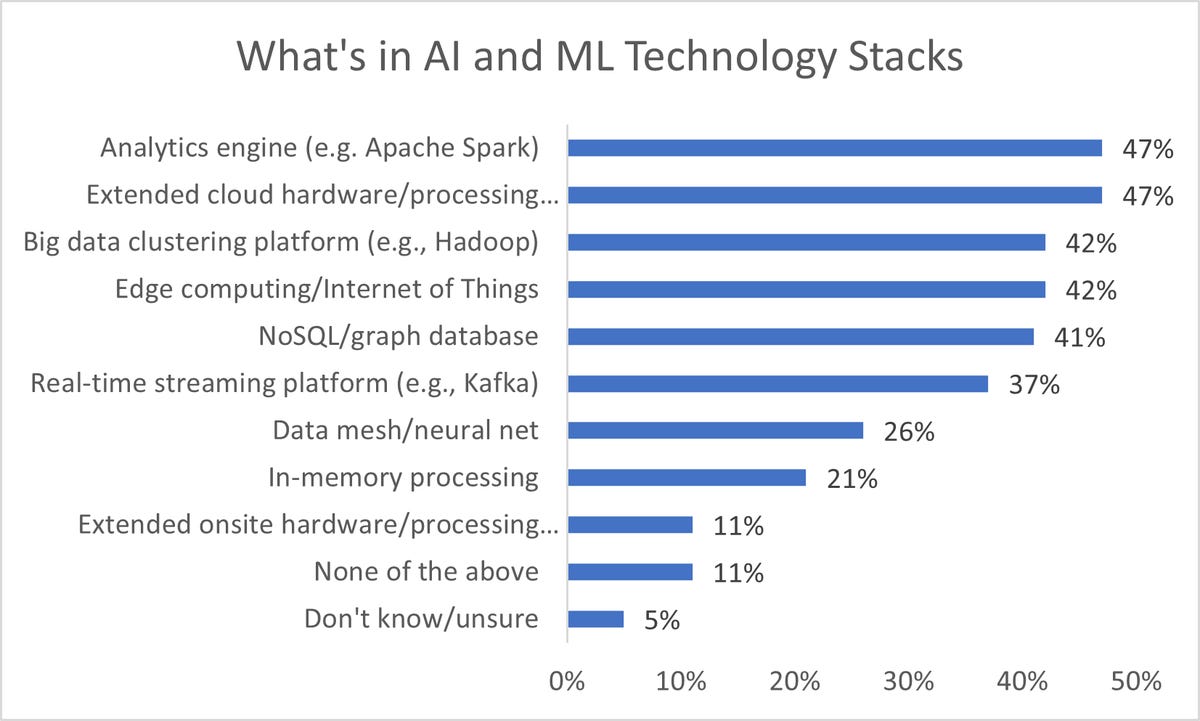

Almost half of enterprises, 47%, purchase extended processing capacity through a third party or cloud provider, making this the leading hardware-related purchase. Only 11% of enterprises buy hardware or systems for on-site implementations. At least 42% are working with Internet of Things (IoT) devices and networks to support their AI efforts. On the software side, 47% are working with analytics engines such as Apache Spark, 42% with big data clustering platforms such as Hadoop, and 42% with advanced databases.

“For many newer users, analytics is not a skill that they have, which results in outsourcing as a viable alternative,” says David Tareen, director of AI and analytics for SAS. For example, organizations may seek outside help for established and well-understood analytic functions such as “micro-targeting, finding fraudulent transactions.” By contrast, Tareen says, new projects that require new data sources plus innovative, advanced analytics and AI methods may include computer vision or conversational AI. “The project requires complete transparency on algorithmic decisions. These types of projects are more difficult to execute, but also offer new sources of revenue and unique differentiation.”

AI bias

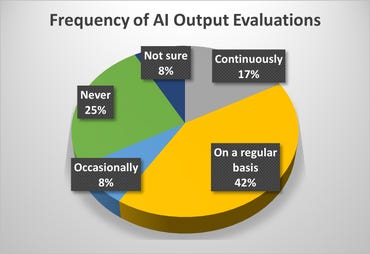

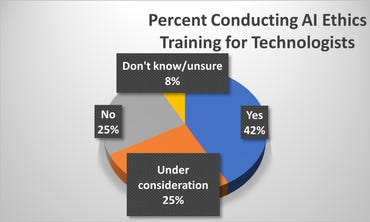

In recent years, AI bias has made headlines, with reports suggesting that AI algorithms reinforce racism and sexism. In addition, reliance on AI introduces a trust factor, as business leaders may be leaving crucial decision-making to unattended systems. How far along are corporate efforts to achieve fairness and eliminate bias in AI results? The data shows they are not very far: 41% of respondents indicated there are few, if any, checks on their AI output, or that they simply aren’t aware whether such checks take place. Only 17% indicated they conduct checks on AI output on a continuous basis. Further, 58% of organizations do not yet provide AI ethics training to technologists or simply don’t know if such training is available.

Are companies doing enough to review their AI results regularly? What’s the best way to do this? “Comprehensive AI review requires a multi-faceted approach,” says Kimberly Nevala, AI strategic advisor at SAS. “The data, operating environment, algorithm, and business outcomes must be routinely assessed both prior to and throughout solution development and deployment. This requires engaging a multitude of disciplines, including business process/performance management, data management, model management, security, IT operations, risk and legal. Many companies are doing discrete components well, but few provide robust, comprehensive coverage. Not because they do not recognize the need, but because it’s complicated.”


In many cases, particularly in highly regulated industries, “most of the required practices exist,” Nevala said. “However, they haven’t been employed against analytic systems in a coordinated manner. Even more often, reviews are limited to point-in-time checks — profiling training data, validating model performance during testing or periodic reporting of business outcomes.”

However, there are measures to fight bias that are succeeding. “Skews in data can be identified using common data analysis techniques and methods to explain the underlying logic of some algorithms that exist,” Nevala said. “Rigorous testing can expose hidden biases, such as a hiring algorithm weeding out all women. So, while still nascent, organizations are getting better at looking for and identifying bias.”
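One common way to run the kind of testing Nevala describes is to compare selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest, flagging ratios under 0.8, a threshold borrowed from the “four-fifths” rule of thumb used in US employment-selection guidance. The function names and the hiring numbers are hypothetical, and a low ratio is a signal to investigate rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs; returns group -> share selected."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so this ratio shows no disparity
    return min(rates.values()) / highest


def flag_adverse_impact(outcomes, threshold=0.8):
    rates = selection_rates(outcomes)
    ratio = disparate_impact_ratio(rates)
    return ratio < threshold, ratio, rates


# Hypothetical screening results: 20 of 40 men and 8 of 40 women advance.
outcomes = [("men", i < 20) for i in range(40)] + [("women", i < 8) for i in range(40)]
flagged, ratio, rates = flag_adverse_impact(outcomes)
```

In this made-up example the selection rates are 0.5 and 0.2, giving a ratio of 0.4, well below the 0.8 threshold, so the screen is flagged for review.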

The harder issue, Nevala continues, is deciding whether a given bias is fair. “This is where fairness comes in. Adding to the complexity, an unbiased system may not be fair, and a fair system may be biased, often by design. So, what is fair? Are you striving for equity or equality? Do your intended users have equal access or ability to use the solution? Will the human subject to the solution agree it is fair? Is this something we should be doing at all? These are questions technology cannot answer. Addressing fairness and bias in AI requires diverse stakeholder teams and collaborative teaming models. Such organizational models are emerging, but they are not yet the norm.”
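One way to see why fairness is a judgment call is that common fairness metrics can disagree. The sketch below uses hypothetical data for two groups whose rates of qualified candidates differ, and scores a perfectly accurate classifier: the gap in true positive rates (often called equal opportunity) is zero, while the gap in selection rates (demographic parity) is 0.3. Deciding which gap matters is the kind of equity-versus-equality question Nevala says technology cannot answer; the metric choices here are illustrative, not hers.

```python
def rate(values):
    """Share of 1s in a list of 0/1 predictions (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_gap(preds, groups):
    """Largest difference in selection rate between any two groups."""
    by_group = {}
    for p, g in zip(preds, groups):
        by_group.setdefault(g, []).append(p)
    rates = [rate(v) for v in by_group.values()]
    return max(rates) - min(rates)


def equal_opportunity_gap(preds, labels, groups):
    """Largest difference in true positive rate: share of truly qualified people selected."""
    by_group = {}
    for p, y, g in zip(preds, labels, groups):
        if y == 1:
            by_group.setdefault(g, []).append(p)
    tprs = [rate(v) for v in by_group.values()]
    return max(tprs) - min(tprs)


# Hypothetical data: 5 of 10 in group A are qualified, 2 of 10 in group B.
groups = ["A"] * 10 + ["B"] * 10
labels = [1] * 5 + [0] * 5 + [1] * 2 + [0] * 8
preds = list(labels)  # a classifier that is exactly right for everyone
```

Forcing the selection-rate gap to zero here would mean selecting unqualified candidates in group B or rejecting qualified ones in group A, which is one concrete form of a “fair system” that is “biased by design.”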

“IT leaders undoubtedly need more training and awareness about real-world and AI bias,” says Dr. Michael Feindt, co-founder of and strategic advisor to Blue Yonder. “Since the world is inherently unbalanced, the data reflecting the current world and human decisions is naturally biased. Until IT leaders and staff take accountability for their AI software’s unconscious bias, AI will not address discrimination, especially if it’s only relying upon historical human or discriminatory evidence to make its decisions. Explicitly minimizing bias in historical data and employing inherently fair AI-algorithms wields a very powerful weapon in the fight against discrimination.”
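Explicitly minimizing bias in historical data can be illustrated with reweighing, a published preprocessing technique from Kamiran and Calders. It is one option among several and is not presented as Blue Yonder’s method. Each training example gets the weight P(group) × P(label) / P(group, label), so that under the weights the label is statistically independent of group membership. The example data is hypothetical.

```python
from collections import Counter


def reweigh(groups, labels):
    """Per-example weights P(g) * P(y) / P(g, y), estimated from the data itself."""
    n = len(labels)
    g_counts = Counter(groups)
    y_counts = Counter(labels)
    gy_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (g_counts[g] / n) * (y_counts[y] / n)  # if group and label were independent
        observed = gy_counts[(g, y)] / n                  # what the historical data shows
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    pos = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    total = sum(w for w, g in zip(weights, groups) if g == group)
    return pos / total


# Hypothetical history: 3 of 4 in group A got a positive outcome, 1 of 4 in group B.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweigh(groups, labels)
```

Under the weights, both groups have a positive rate of 0.5. Passing the weights as sample weights to a training routine that supports them leaves the records untouched, which keeps the original history available for audit.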

The process needs to be open and available to all decision-makers inside and outside of IT. Creating responsible AI “requires specific tools and a supporting governance process to balance the benefit-to-risk trade-offs meaningfully,” says Kathleen Featheringham, director of artificial intelligence strategy at Booz Allen. “These are foundational elements required to put responsible AI principles and values into practice. Organizations must be able to make all data available in a descriptive form to be continually updated with changes and uses to enable others to explore potential bias involving the data gathering process. This is a critical step to help identify and categorize a model’s originally intended use. Until this is done in all organizations, we can’t eliminate bias.”
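Featheringham’s call to make data available “in a descriptive form” that is “continually updated with changes and uses” resembles published proposals such as datasheets for datasets. The sketch below is a minimal, hypothetical record of that kind; the class name, fields, and example values are illustrative assumptions, not a Booz Allen format.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DatasetCard:
    name: str
    collection_process: str   # how and from whom the data was gathered
    intended_use: str         # the use the data was originally collected for
    known_limitations: list = field(default_factory=list)
    change_log: list = field(default_factory=list)  # (date, description) pairs
    uses: list = field(default_factory=list)        # models or projects consuming the data

    def record_change(self, description, when=None):
        self.change_log.append((when or date.today(), description))

    def register_use(self, consumer):
        if consumer not in self.uses:
            self.uses.append(consumer)

    def summary(self):
        lines = [
            f"Dataset: {self.name}",
            f"Collected: {self.collection_process}",
            f"Intended use: {self.intended_use}",
        ]
        lines += [f"Limitation: {item}" for item in self.known_limitations]
        lines += [f"{when.isoformat()}: {what}" for when, what in self.change_log]
        lines += [f"Used by: {consumer}" for consumer in self.uses]
        return "\n".join(lines)


# Hypothetical example
card = DatasetCard(
    name="loan_applications_2020",
    collection_process="Online applications with self-reported income",
    intended_use="Credit-risk scoring for retail loans",
    known_limitations=["Under-represents applicants without internet access"],
)
card.record_change("Dropped ZIP code column", date(2021, 3, 1))
card.register_use("risk-model-v2")
```

Keeping the record next to the data, and updating it whenever the data or its consumers change, lets reviewers outside the original team trace how the data was gathered and compare current uses with the intended one.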

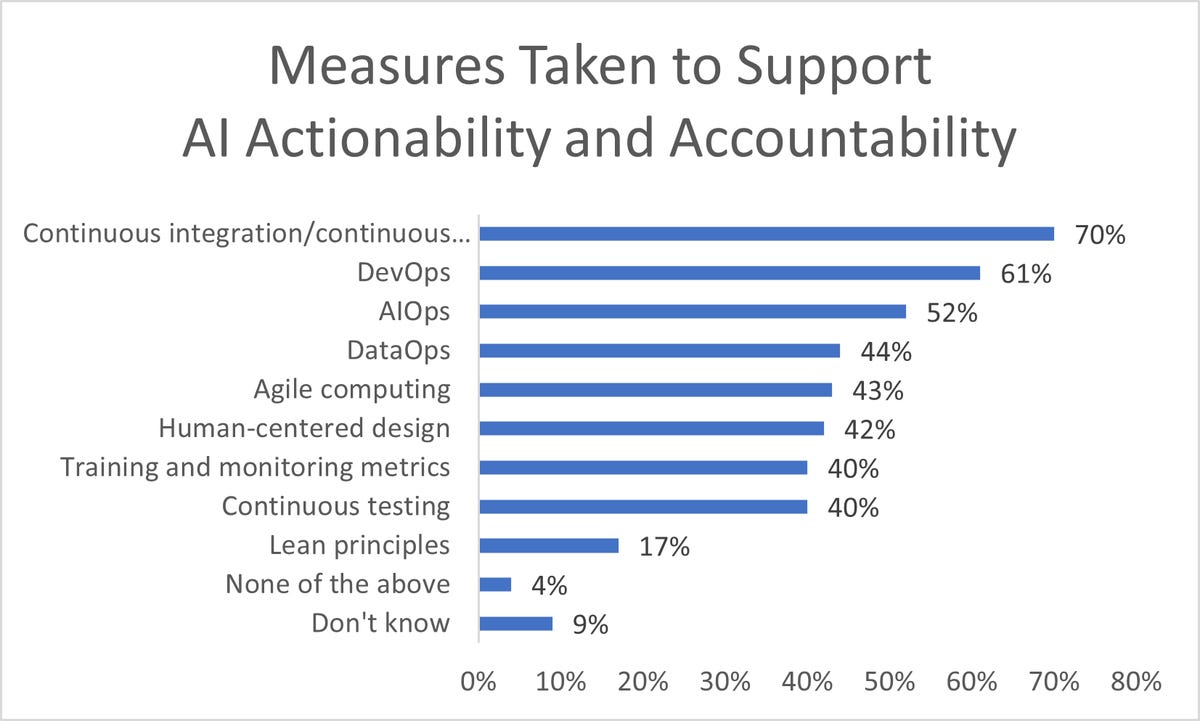

There are a number of ways IT leaders and AI proponents can help address issues with AI actionability and accountability. Seventy percent of organizations are pursuing continuous integration/continuous deployment (CI/CD) approaches in their AI and ML work to assure constant checks on the composition of algorithms, associated applications, and the data going through them. DevOps, which aligns and automates the activities of development and operations teams, is seen at 61% of organizations. AIOps (artificial intelligence for IT operations, which applies AI to managing IT initiatives) is used at more than half the companies in the survey (52%). DataOps, intended to manage and automate the flow of data to analytic platforms, is in play at 44% of organizations, and agile computing approaches are employed by 43%.
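In a CI/CD pipeline for AI, the “constant checks” usually take the form of automated gates that can stop a release. The sketch below shows one hypothetical gate: evaluation metrics are compared with agreed thresholds, and a nonzero return code fails the build. The metric names and limits are assumptions for illustration; a CI job would typically call `sys.exit(main(metrics))` with metrics produced by an earlier evaluation step.

```python
# Hypothetical thresholds a team might agree on; each maps to (comparison, limit).
GATES = {
    "accuracy": (">=", 0.85),
    "disparate_impact_ratio": (">=", 0.8),
    "null_fraction": ("<=", 0.02),
}


def check_gates(metrics, gates=GATES):
    """Return a list of human-readable failures (empty if every gate passes)."""
    failures = []
    for name, (op, limit) in gates.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif op == ">=" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
        elif op == "<=" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
    return failures


def main(metrics):
    """Print failures and return a process exit code for the CI runner."""
    failures = check_gates(metrics)
    for failure in failures:
        print("GATE FAILED:", failure)
    return 1 if failures else 0
```

Treating a fairness metric as a release gate alongside accuracy is one way to make bias checks continuous rather than point-in-time.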

AIOps, in particular, is a powerful methodology for delivering AI capabilities across a complex enterprise with many different agendas and requirements. “Successfully moving pilot projects into operations requires us to think about adoption and deployment holistically and from an enterprise perspective,” says Justin Neroda, vice president for Booz Allen, which supports more than 120 active AI projects. “We’ve created an AIOps engineering framework focused on the critical elements needed to overcome the post-pilot challenges of responsibly developing AI. AIOps can bring many technical benefits to an organization, including reducing the maintenance burden on individual analysts while maximizing subject matter experts’ productivity and satisfaction. This approach drives the ability to deploy preconfigured AIOps pipelines across a range of environments rapidly. It allows for the automation of model tracking, versioning, and monitoring processes throughout the development, testing, and fielding of AI models.”
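The automation of model tracking, versioning, and monitoring that Neroda describes can be sketched with a small in-memory registry. This is a hypothetical illustration, not Booz Allen’s framework or any product’s API; real deployments generally use a dedicated model registry backed by persistent storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class ModelRegistry:
    """In-memory stand-in for a model registry (hypothetical, for illustration)."""

    def __init__(self):
        self._versions = {}  # model name -> list of version records

    def register(self, name, params, metrics):
        # Fingerprint the configuration so identical configurations are easy to spot
        fingerprint = hashlib.sha256(json.dumps(params, sort_keys=True).encode()).hexdigest()[:12]
        versions = self._versions.setdefault(name, [])
        versions.append({
            "version": len(versions) + 1,
            "fingerprint": fingerprint,
            "params": params,
            "metrics": dict(metrics),  # metrics measured at registration time
            "registered_at": datetime.now(timezone.utc).isoformat(),
            "monitoring": [],          # (metric, value) pairs observed in production
        })
        return len(versions)

    def log_production_metric(self, name, version, metric, value):
        self._versions[name][version - 1]["monitoring"].append((metric, value))

    def degraded(self, name, version, metric, tolerance=0.05):
        """True if the latest production value is more than `tolerance` below the registered one."""
        record = self._versions[name][version - 1]
        observed = [v for m, v in record["monitoring"] if m == metric]
        if not observed:
            return False
        return record["metrics"][metric] - observed[-1] > tolerance
```

Usage might look like registering `"churn"` version 1 and 2 with their offline AUC, then logging production AUC against version 2 and alerting when `degraded(...)` turns true.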

MLOps methodologies

Related to these methodologies is MLOps, which Chris McClean, director and global lead for digital ethics at Avanade, advocates as a way to deploy machine learning models into production and maintain them effectively. “MLOps methodologies not only avoid the common mistakes we have seen other companies make but set up the organization for a future full of continuous successful AI deployment,” McClean says. He also advocates extensive use of automation and automation tools “to better measure and improve KPIs.”

Industry experts cite the following steps for a successful AI and ML journey that secures trust in, and the viability of, the outcomes delivered:

- Focus on the business problem: “Look for places where there is already a lot of untapped data,” says Sivasubramanian. “Avoid fixing something that isn’t actually broken, or picking a problem that’s flashy but has unclear business value.”

- Focus on the data: Sethi recommends adopting a “modern data platform” to supply the fuel that makes AI and ML work. “Some of the key areas we have seen clients begin their AI journey are in sales optimization, customer journey analytics, and system monitoring. To further drive scale and accessibility to data, establishing a foundational data platform is a key element as it unlocks the structured and unstructured data that drive these underlying use cases.” In addition, managing data can take up the majority of an AI team’s time, says Sivasubramanian. “When starting out, the three most important questions to ask are: What data is available today? What data can be made available? And a year from now, what data will we wish we had started collecting today?”

- Work closely with the business: “IT delivers the infrastructure to model the data, while subject matter experts use the models to find the right solutions,” says Arthur Hu, senior vice president and CIO at Lenovo. “It’s analogous to a recipe: It’s not about any one ingredient or even all of the ingredients; it takes the right balance of ingredients working together to produce the desired result. The key to ensuring that AI is used fairly and without bias is the same key to making it successful in the first place: humans steering the course. AI’s breakthroughs are only possible because experts in their fields drive them.”

- Watch out for AI “drift”: Regularly reviewing model results and performance “is a best practice companies should implement on a routine basis,” says Sivasubramanian. “It is important to regularly review model performance because their accuracy can deteriorate over time, a phenomenon known as model drift.” In addition to detecting model and concept drift, “companies should also review whether potential bias might have developed over time in a model that has already been trained,” Sivasubramanian says. “This can happen even though the initial data and model were not biased, but changes in the world cause bias to develop over time.” Demographic shifts across a sampled population may result in outdated results, for instance.

- Develop your team: Wide-scale training “is a critical aspect of achieving responsible AI, which combines AI adoption, AI ethics, and workforce development,” says Featheringham. “Ethical and responsible AI development and deployment depends on the people who contribute to its adoption, integration, and use. Humans are at the core of every AI system and should maintain ultimate control, which is why their proper training, at each leadership level, is crucial.” This includes well-focused training and awareness, says McClean. “IT leaders and staff should learn how to consider the ethical impacts of the technology they’re developing or operating. They should also be able to articulate how their technology supports the company’s values, whether those values are diversity and inclusivity, employee well-being, customer satisfaction, or environmental responsibility. Companies don’t need everyone to learn how to identify and address AI bias or how to write a policy on AI fairness. Instead, they need everyone to understand that these are company priorities, and each person has a role to play if they want to succeed.”
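The routine drift reviews recommended above are often automated with a distribution comparison such as the population stability index (PSI), which measures how far live inputs have moved from the training baseline: PSI = Σ (actual share − expected share) × ln(actual share / expected share) over a set of bins. The sketch below is one minimal version under stated assumptions: equal-width bins taken from the baseline, a small floor (`eps`) to avoid taking the logarithm of zero, and the commonly cited rule of thumb that values above roughly 0.25 signal a significant shift. None of these choices come from the article.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range into edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline versus a population that has shifted upward.
baseline = list(range(100))
shifted = [v + 30 for v in baseline]
drift_score = psi(baseline, shifted)
```

Running the same comparison separately for each demographic group, or tracking a fairness metric over time, is one way to check whether bias has crept into a model that was unbiased when it was trained.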

Ultimately, “creating AI solutions that work for humans also requires understanding how humans work,” says Nevala. “How can humans engaging with AI systems influence their behavior and performance? And vice versa. Critical thinking, navigating uncertainty and collaborating productively are also underrated yet key skills.”

https://www.zdnet.com/article/preparing-for-the-golden-age-of-artificial-intelligence-and-machine-learning/
