How AI-generated text is poisoning the internet

This has been a wild year for AI. If you’ve spent much time online, you’ve probably bumped into images generated by AI systems like DALL-E 2 or Stable Diffusion, or jokes, essays, or other text written by ChatGPT, the latest incarnation of OpenAI’s large language model GPT-3.

Sometimes it’s obvious when a picture or a piece of text has been created by an AI. But increasingly, the output these models generate can easily fool us into thinking it was made by a human. And large language models in particular are confident bullshitters: they produce text that sounds correct but may in fact be full of falsehoods.

While that doesn’t matter if it’s just a bit of fun, it can have serious consequences if AI models are used to offer unfiltered health advice or provide other forms of important information. AI systems could also make it stupidly easy to produce reams of misinformation, abuse, and spam, distorting the information we consume and even our sense of reality. That could be particularly worrying around elections, for example.

The proliferation of these easily accessible large language models raises an important question: How will we know whether what we read online was written by a human or a machine? I’ve just published a story looking into the tools we currently have to spot AI-generated text. Spoiler alert: Today’s detection software is woefully inadequate against ChatGPT.

But there is a more serious long-term implication. We may be witnessing, in real time, the birth of a snowball of bullshit.

Large language models are trained on data sets built by scraping the internet for text, including all the toxic, silly, false, and malicious things humans have written online. The finished AI models regurgitate these falsehoods as fact, and their output is spread everywhere online. Tech companies then scrape the internet again, scooping up AI-written text that they use to train bigger, more convincing models, which humans can use to generate even more nonsense before it is scraped again and again, ad nauseam.

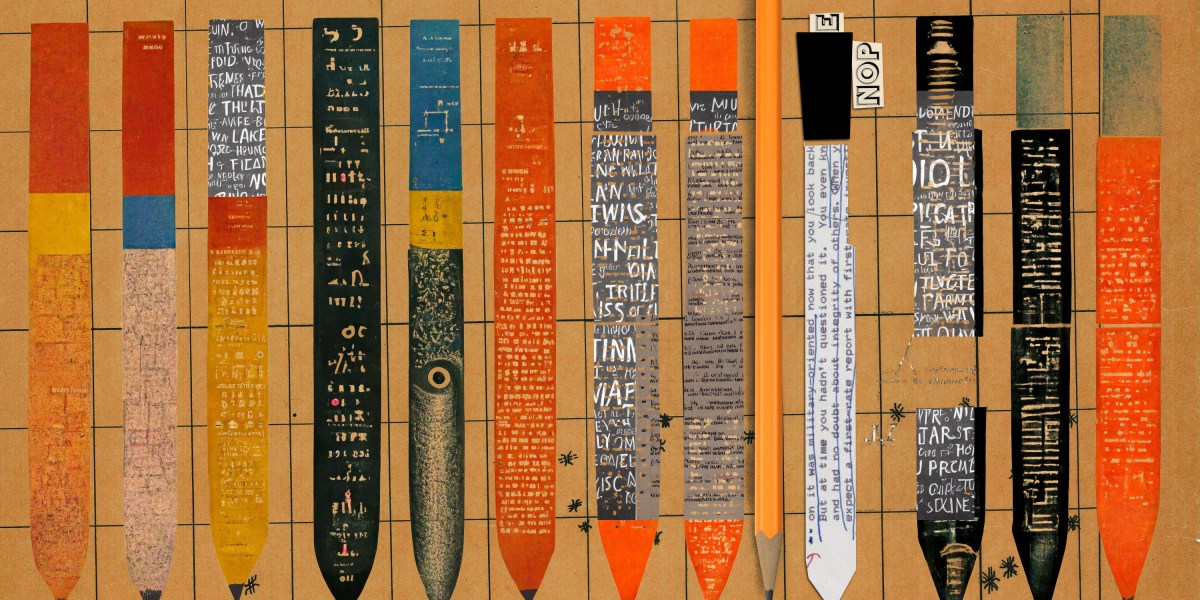

This problem, AI feeding on itself and producing increasingly polluted output, extends to images. “The internet is now forever contaminated with images made by AI,” Mike Cook, an AI researcher at King’s College London, told my colleague Will Douglas Heaven for his new piece on the future of generative AI models.

“The images that we made in 2022 will be a part of any model that is made from now on.”